I’ve been deploying a Cisco UCS Chassis with multiple Cisco B230 M2 Blades. However, the uplink switches of the Fabric Interconnects are medium-sized enterprise switches, not a Nexus 5K or better. In vSphere cluster designs, you dedicate one or more NICs to the vMotion interface. With the enhancements in vSphere 5.0, you can combine multiple 1 Gb or 10 Gb network cards for vMotion and get better performance.

On 14 December 2011, Duncan Epping wrote on his site:

[quote]“I had a question last week about multi NIC vMotion. The question was if multi NIC vMotion was a multi initiator / multi target solution. Meaning that, if available, on both the source and the destination multiple NICs are used for the vMotion / migration of a VM. Yes it is!”[/quote]

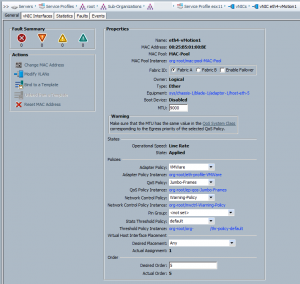

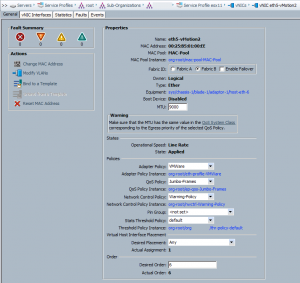

I was a bit worried about my ESXi 5.0 vMotion traffic going up through the Fabric Interconnect from the source Blade, across the network switches, and back down through the Fabric Interconnect to the target Blade. So I decided to create two vmkernel ports for vMotion per ESXi host and segregate them into two VLANs. Each VLAN is used inside only one Fabric Interconnect, so vMotion traffic between two blades is switched locally by that Fabric Interconnect and never has to cross the upstream switches.

- vNIC Interface eth4 for vMotion-A on Fabric A (VLAN 70)
- vNIC Interface eth5 for vMotion-B on Fabric B (VLAN 71)
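The design above can be sketched as a small model that checks whether each vMotion VLAN stays on a single fabric. This is a hypothetical Python illustration, not UCS Manager or vSphere tooling: the `VMotionVnic` class and the `cross_fabric_vlans` and `traffic_path` helpers are assumptions made for this example. The path model is deliberately simplified and only captures the idea that, with the Fabric Interconnects in end-host mode, same-fabric traffic is switched by the Fabric Interconnect while cross-fabric traffic has to go through the upstream switches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VMotionVnic:
    """One vMotion vNIC in a blade's service profile (hypothetical model)."""
    name: str    # vNIC name, e.g. "eth4"
    vlan: int    # VLAN carried by this vNIC
    fabric: str  # Fabric Interconnect the vNIC is pinned to: "A" or "B"


# Layout from the post: each vMotion VLAN exists on one fabric only.
DESIGN = [
    VMotionVnic("eth4", 70, "A"),  # vMotion-A
    VMotionVnic("eth5", 71, "B"),  # vMotion-B
]


def vlan_fabrics(vnics):
    """Map each VLAN to the set of fabrics it is carried on."""
    fabrics = {}
    for vnic in vnics:
        fabrics.setdefault(vnic.vlan, set()).add(vnic.fabric)
    return fabrics


def cross_fabric_vlans(vnics):
    """Return the VLANs carried on more than one fabric (empty list = fabric-local design)."""
    return sorted(vlan for vlan, f in vlan_fabrics(vnics).items() if len(f) > 1)


def traffic_path(src_fabric, dst_fabric):
    """Simplified blade-to-blade hop list, assuming the FIs run in end-host mode."""
    if src_fabric == dst_fabric:
        # Same fabric: the Fabric Interconnect switches the frames locally.
        return ["source blade", f"FI-{src_fabric}", "target blade"]
    # Different fabrics: frames leave through the uplinks and come back down.
    return ["source blade", f"FI-{src_fabric}", "upstream switches",
            f"FI-{dst_fabric}", "target blade"]


if __name__ == "__main__":
    assert cross_fabric_vlans(DESIGN) == []
    for vnic in DESIGN:
        # Every host uses the same layout, so source and target vNICs share a fabric.
        print(vnic.name, vnic.vlan, " -> ".join(traffic_path(vnic.fabric, vnic.fabric)))
```

Adding a hypothetical `VMotionVnic("eth6", 70, "B")` to `DESIGN` makes `cross_fabric_vlans` report VLAN 70, which is the situation where part of the vMotion traffic could take the longer path through the upstream switches.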

Now let’s put this configuration to the test.

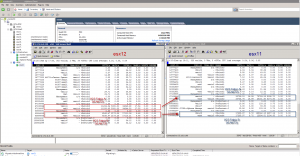

The test VM is a large nested vESX named esx21, with 32 vCPUs and 64 GB of memory. It is migrated with vMotion from esx12 (source network statistics in red) to esx11 (target network statistics in blue).

The screenshot speaks for itself: vMotion uses both NICs and both VLANs to transfer the memory to esx11. The two streams reach a combined 7504 Mb/s transmitted (TX) and 7369 Mb/s received (RX). A single stream cannot exceed 5400 Mb/s because of limitations of the Cisco 2104XP FEX and the 6120XP Fabric Interconnect. Each 10 Gb link is shared by two B230 M2 blades.
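A back-of-the-envelope estimate puts these numbers in perspective. This Python sketch assumes that all 64 GB of esx21's memory is copied exactly once and that a single-stream vMotion would run at the 5400 Mb/s per-stream ceiling; both are simplifications, and the `transfer_seconds` helper is hypothetical, not a VMware formula.

```python
def transfer_seconds(memory_gb, rate_mbps):
    """Seconds to copy `memory_gb` gigabytes once at `rate_mbps` megabits per second.

    Assumes decimal units (1 GB = 8000 Mb) and a single full pass over memory;
    a real vMotion also re-sends pages dirtied during the copy.
    """
    return memory_gb * 8000 / rate_mbps


MEMORY_GB = 64             # memory configured on esx21
COMBINED_MBPS = 7504       # combined TX rate of the two streams in the screenshot
SINGLE_STREAM_MBPS = 5400  # per-stream ceiling observed in this setup

if __name__ == "__main__":
    print(f"average per stream : {COMBINED_MBPS / 2:.0f} Mb/s")
    print(f"two streams        : {transfer_seconds(MEMORY_GB, COMBINED_MBPS):.0f} s")
    print(f"one stream (best)  : {transfer_seconds(MEMORY_GB, SINGLE_STREAM_MBPS):.0f} s")
```

Under these assumptions, the two streams copy the memory in about 68 s, against about 95 s for one stream even at its best-case rate.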

If you want to learn how to set up Multi-NIC vMotion, check out Duncan’s post on the topic.

Thanks go to Duncan Epping (@duncanyb) and Dave Alexander (@ucs_dave) for their help.