In this post, I will describe how I came to deploy ScaleIO software-defined storage in the Home Datacenter. Over the course of 2016, I upgraded my clusters from VMware Virtual SAN Hybrid (flash for the caching tier and SAS Enterprise disks for the capacity tier) to an All-Flash configuration. This released multiple 4TB SAS Enterprise disks from the vSAN config. Rather than remove these from the hosts, I decided to learn and test the Free and Frictionless edition of DellEMC ScaleIO.

My ScaleIO design crosses the boundaries of three VMware vSphere clusters, and is hosted across eight tower case servers in the Home Datacenter. In a normal production ScaleIO cluster, the recommendation is to have a minimum of 6 disk drives per ScaleIO Data Server (the servers sharing the storage). As you will see, in my design I spread the SAS Enterprise disks across the eight servers.

I’m not going to cover the definition of Protection Domains or Storage Pools in this article, but for this design, I have a single Protection Domain (pd1) with a single Storage Pool, which I named SAS_pool. I did divide the Protection Domain into three separate Fault Sets (fs1, fs2 and fs3), so as to spread failures across the hosts based on the power phase used in my datacenter.

I’ve run ScaleIO across my cluster for 10 months for some specific workloads that I just could not fit or did not want to fit on my VMware vSAN All-Flash environment.

Here is a large screenshot of my ScaleIO configuration as it’s re-balancing the workload across the hosts.

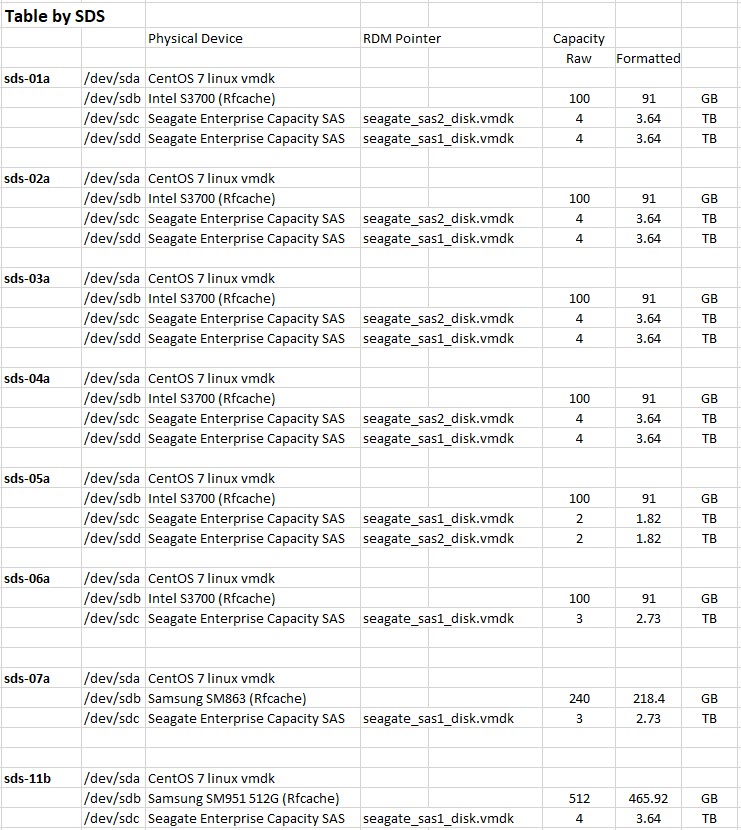

Each ScaleIO Data Server (SDS) was a CentOS 7 VM running on an ESXi host and had two or three physical devices attached to it using RDM. Each SDS had an SSD device for the RFcache (read cache) and one or two SAS disk drives.

At the peak of this deployment, the ScaleIO config had 41.8TB of storage. I set the Spare Capacity at 8TB, leaving 34.5TB of usable storage. Because every storage object is kept twice for redundancy, I could only store 17.2TB of data for my VMs and my vSphere hosts.
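The last step of that arithmetic can be written out as a small sketch. It assumes that every piece of data is stored twice, so the writable capacity is half of what remains after the spare capacity is set aside. The function name and the `copies` parameter are made up for illustration and are not part of any ScaleIO tooling.

```python
def writable_capacity_tb(capacity_after_spare_tb, copies=2):
    """Capacity left for data when each object is stored `copies` times.

    Hypothetical helper for illustration; `copies=2` is an assumption
    matching the halving described in the post.
    """
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return capacity_after_spare_tb / copies


# 34.5TB remains after the spare capacity is set aside (figure from the post).
print(writable_capacity_tb(34.5))
```

With the 34.5TB figure this gives 17.25TB, in line with the roughly 17.2TB quoted above.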

Over the past 10 months of using ScaleIO, I’ve found two main limitations.

- The ScaleIO release cycle, even more so for people using the Free & Frictionless version of ScaleIO. The release cycle is out of sync with the vSphere releases. Some versions are only released to Dell EMC customers with support contracts, and some versions take between 6 and 8 weeks to move from restricted access to public access. At the end of March 2017, there was no version of ScaleIO that supported vSphere 6.5.

- Maintenance & Operations. Whenever I wanted or needed to upgrade an ESXi host with a patch, a driver change or a new version of NSX-v, I had to plan the power-off of the SDS VM running on that ESXi host. You can only put a single SDS per Protection Domain in planned maintenance mode, so only one ESXi host could be patched at a time. A simple cluster upgrade process with a DRS backed network now took much longer and required more manual steps: put the SDS VM in ScaleIO maintenance mode, shut down the SDS VM (and take the time to patch the Linux in the SDS VM), put the host in maintenance mode, patch ESXi, restart ESXi, exit maintenance mode, restart the SDS VM, exit the ScaleIO maintenance mode, wait for ScaleIO to rebuild the redundancy, and then move to the next host.
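To make the cost of that second limitation concrete, here is a minimal sketch of the per-host sequence as a plain checklist generator. It only models the ordering described above, with one host fully finished before the next one starts; it does not call ScaleIO or vSphere, and the function name and host names are hypothetical.

```python
# Per-host steps, in the order listed in the post. These are descriptions
# only; nothing here talks to ScaleIO or vSphere.
STEPS = (
    "put the SDS in ScaleIO maintenance mode",
    "shut down the SDS VM (patching its Linux along the way)",
    "put the ESXi host in maintenance mode",
    "patch ESXi",
    "restart ESXi",
    "exit ESXi maintenance mode",
    "restart the SDS VM",
    "exit ScaleIO maintenance mode",
    "wait for ScaleIO to rebuild redundancy",
)


def rolling_upgrade_plan(hosts):
    """Return (host, step) pairs, completing one host before the next.

    Only one SDS per Protection Domain can be in planned maintenance,
    so the hosts are handled strictly one after another.
    """
    plan = []
    for host in hosts:
        for step in STEPS:
            plan.append((host, step))
    return plan
```

Calling `rolling_upgrade_plan(["esxi01", "esxi02"])` yields nine manual steps per host, which is where the extra time in a cluster-wide upgrade comes from.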

I’ve now decommissioned the ScaleIO storage tier as I needed to migrate to vSphere 6.5 for some new product testing.