In my previous post, I described building two Linux virtual machines to benchmark the network. Here are the results.

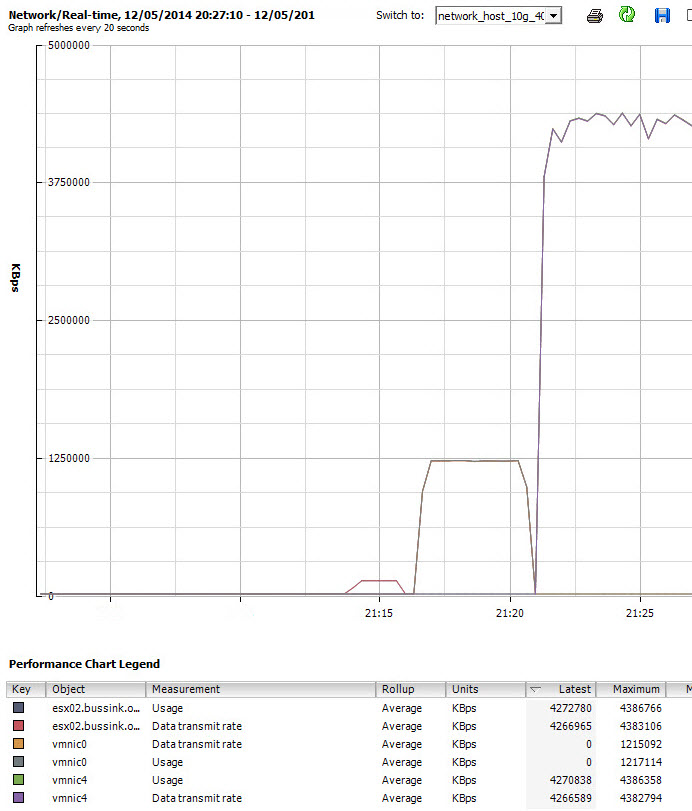

The first blip is an iperf run at maximum speed between the two Linux VMs at 1Gbps, on separate hosts using Intel I350-T2 adapters.

The second spike (vmnic0) is an iperf run at maximum speed between the two Linux VMs at 10Gbps. The two ESXi hosts use Intel X540-T2 adapters.

The third mountain (vmnic4), and the most impressive result, is an iperf run between the Linux VMs over 40Gb Ethernet. The two ESXi hosts use Mellanox ConnectX-3 VPI adapters.

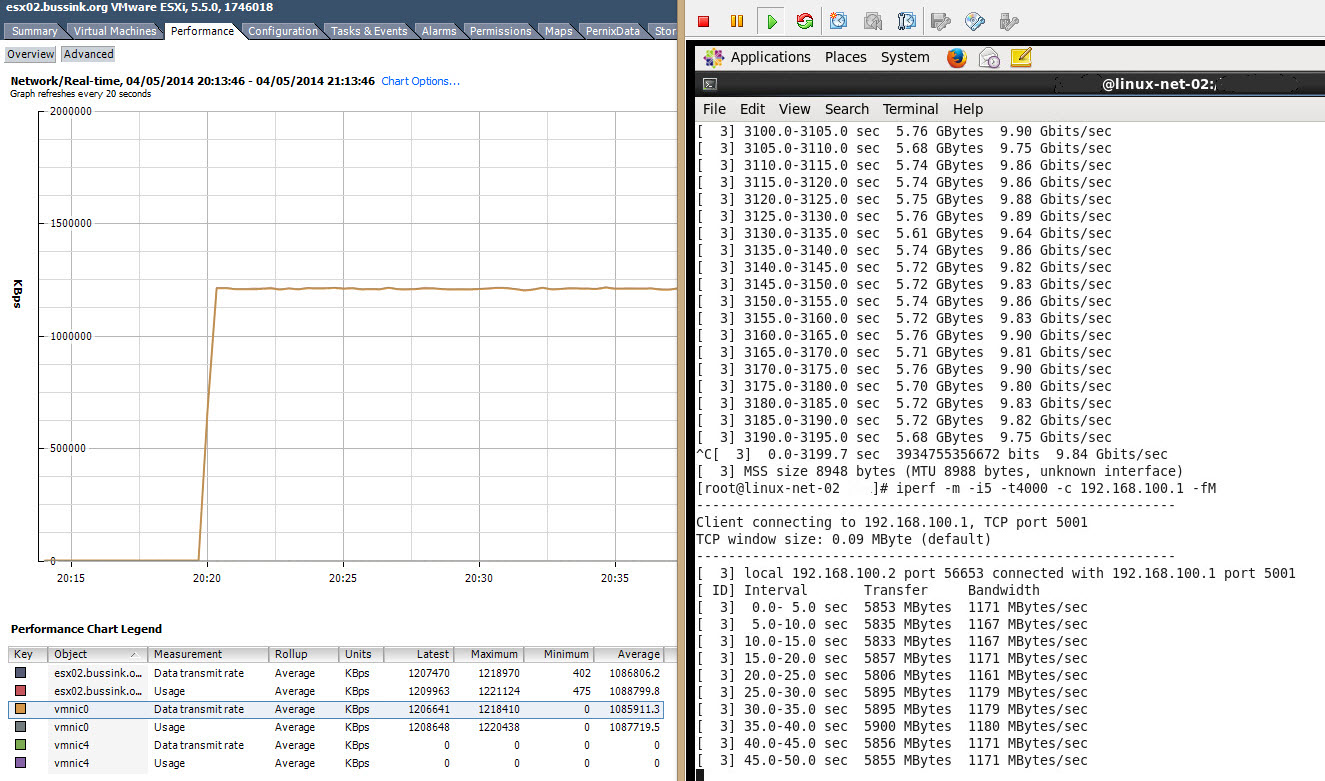

The Homelab 2014 ESXi hosts use a Supermicro X9SRH-7TF, which comes with an embedded Intel X540-T2. The following picture gives a closer look at the results of the iperf test at 10Gbps.

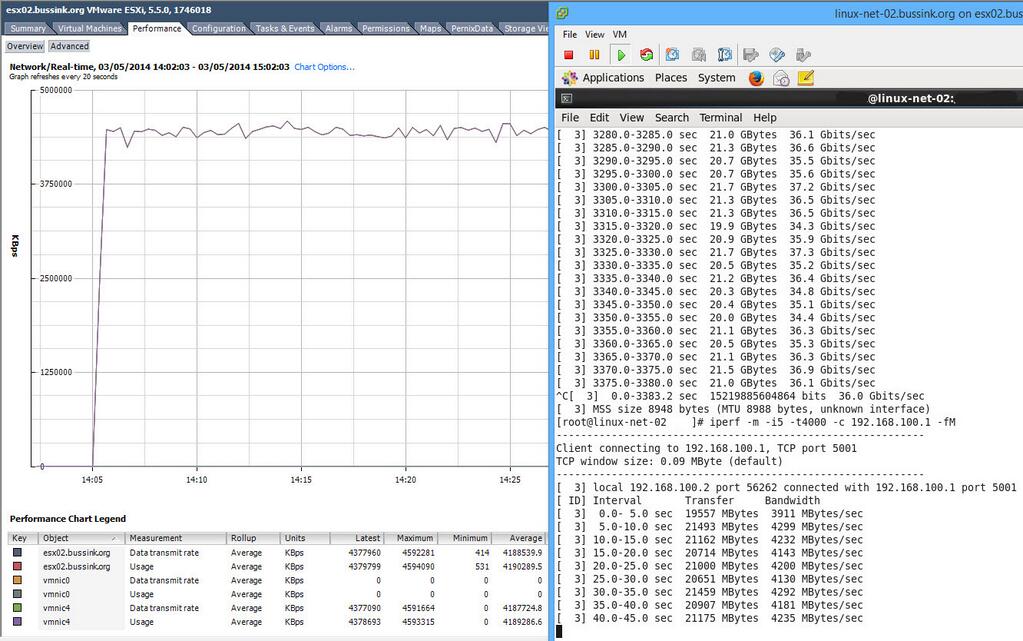

Last summer I also got a set of Mellanox ConnectX-3 VPI Dual Adapters from eBay for $300. These cards support InfiniBand at 40Gb/s and 56Gb/s, and Ethernet at 10Gb/s and 40Gb/s. By default, vSphere 5.5 recognizes these adapters as 40Gb Ethernet adapters. I really wanted to test them at 40Gb Ethernet… and the results are great. I can push up to 37.3 Gbits/sec through a single 40Gb Ethernet link, or 4299 MBytes/sec. Just have a peek at the following screenshot.

I guess having 40Gb Ethernet for vMotion is too fast… The vMotion of a 12GB VM takes 15-16 seconds, of which only 3 seconds are spent on the memory transfer; the rest goes to the memory snapshot, process freeze, CPU register cloning and so on.
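As a rough sanity check on that 3-second figure, here is a back-of-the-envelope sketch of how long it takes to move 12GB of memory at a given throughput. It assumes decimal gigabytes and that vMotion can sustain the iperf throughput, which it may well not; the 1GbE and 10GbE rates are nominal line rates, not measurements, and the function name is just for illustration.

```python
def transfer_seconds(size_gb: float, throughput_gbits: float) -> float:
    """Seconds needed to move size_gb gigabytes at throughput_gbits gigabits/sec.

    Assumes decimal units (1 GB = 8 Gbit) and a link that stays saturated.
    """
    return size_gb * 8 / throughput_gbits


# Nominal line rates for 1GbE and 10GbE, measured iperf peak for 40GbE.
for label, rate in [("1GbE", 1.0), ("10GbE", 10.0), ("40GbE (37.3 measured)", 37.3)]:
    print(f"{label}: {transfer_seconds(12, rate):.1f} s")
# 1GbE: 96.0 s
# 10GbE: 9.6 s
# 40GbE (37.3 measured): 2.6 s
```

At 40Gb Ethernet the copy itself drops to a couple of seconds, which is in line with the fixed overhead dominating the 15-16 second vMotion.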

All the tests at 10Gb Ethernet and 40Gb Ethernet were run with Jumbo Frames. For 40Gb Ethernet, Jumbo Frames make a real difference in bandwidth (about 2.5x).
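One plausible reason Jumbo Frames matter so much at this speed is the number of packets the hosts have to process each second. That explanation is my assumption and was not measured here. The sketch below estimates the packet rate needed to fill a 40Gbps link at a standard 1500-byte MTU versus a 9000-byte MTU, ignoring Ethernet framing and header overhead.

```python
def packets_per_second(rate_gbits: float, mtu_bytes: int) -> float:
    """Approximate packets/sec needed to fill a link with MTU-sized packets.

    Simplification: ignores Ethernet preamble, headers and inter-frame gap.
    """
    return rate_gbits * 1e9 / (mtu_bytes * 8)


for mtu in (1500, 9000):
    pps = packets_per_second(40, mtu)
    print(f"MTU {mtu}: {pps / 1e6:.2f} million packets/s")
# MTU 1500: 3.33 million packets/s
# MTU 9000: 0.56 million packets/s
```

Six times fewer packets for the same bandwidth means far fewer interrupts and less per-packet work for the VMs and the hypervisor, which would be consistent with the gain seen in the tests.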

This was a fun piece to lab in the homelab.