In this first post, I will attempt to describe the different components of the NSX Advanced Load Balancer (ALB). Following the purchase of Avi Networks by VMware in June 2019, the product Avi Vantage has been renamed NSX Advanced Load Balancer. If you deploy the latest release, 18.2.7, you will notice that it still carries the Avi Networks branding. This will change in the near future.

NSX Advanced Load Balancer is a software-defined solution that provides application delivery services in an automated, elastic deployment. It has a built-in, best-of-breed operational dashboard for advanced analytics and reporting, is completely API-driven, and supports a wide range of infrastructure and cloud providers. In this series of blog posts, I will focus on the vSphere integration in my home datacenter.

Version 18.2 of the product works over distributed virtual port groups that belong to either a Virtual Distributed Switch (regular VLAN networks) or Logical Switches (NSX-V VXLAN-based or NSX-T GENEVE networks), as it is agnostic of the underlying network infrastructure. Integration in an NSX-T network is possible today, but still requires some manual configuration steps. The automation of this process will come in a future release of NSX ALB.

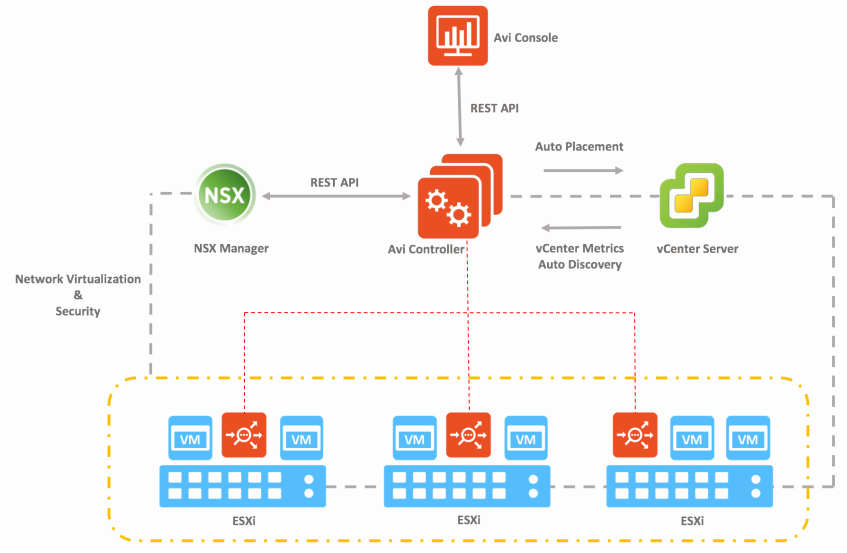

The management plane is composed of one or three Controllers, and the data plane is composed of the Service Engines (SE).

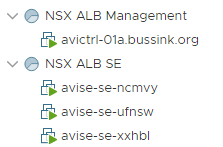

The Controller is the central repository for the configurations and policies, and it manages the full lifecycle of the Service Engines (creation, control and deletion). The Controllers run on dedicated virtual machines. I’ve used a Controller that is integrated in my vSphere infrastructure to automatically deploy and configure the Service Engines across the selected cluster.

The Controllers have recommended minimum requirements of 24 GB RAM, 8 vCPUs and 128 GB of disk per node. Whether you need to size the appliance above these minimums depends on the volume of analytics data and the number of flows. See https://avinetworks.com/docs/latest/avi-controller-sizing/ for details.
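To make the sizing figures concrete, here is a minimal sketch that checks a planned Controller VM against the recommended minimums quoted above. The `ControllerSpec` class and `sizing_shortfalls` function are names I made up for illustration; they are not part of any Avi or VMware tooling.

```python
from dataclasses import dataclass
from typing import List

# Recommended minimums per Controller node, as quoted in this post.
MIN_RAM_GB = 24
MIN_VCPU = 8
MIN_DISK_GB = 128


@dataclass
class ControllerSpec:
    """Hypothetical description of a planned Controller VM."""
    ram_gb: int
    vcpu: int
    disk_gb: int


def sizing_shortfalls(spec: ControllerSpec) -> List[str]:
    """Return one message per resource that is below the recommended minimum."""
    shortfalls = []
    if spec.ram_gb < MIN_RAM_GB:
        shortfalls.append(f"RAM: {spec.ram_gb} GB < {MIN_RAM_GB} GB")
    if spec.vcpu < MIN_VCPU:
        shortfalls.append(f"vCPU: {spec.vcpu} < {MIN_VCPU}")
    if spec.disk_gb < MIN_DISK_GB:
        shortfalls.append(f"Disk: {spec.disk_gb} GB < {MIN_DISK_GB} GB")
    return shortfalls


if __name__ == "__main__":
    # Example values for a small lab VM; adjust to your own plan.
    lab_vm = ControllerSpec(ram_gb=16, vcpu=8, disk_gb=128)
    for message in sizing_shortfalls(lab_vm):
        print(message)
```

An empty list means the plan meets the minimums. It says nothing about whether you need more than that for heavy analytics or flow volumes.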

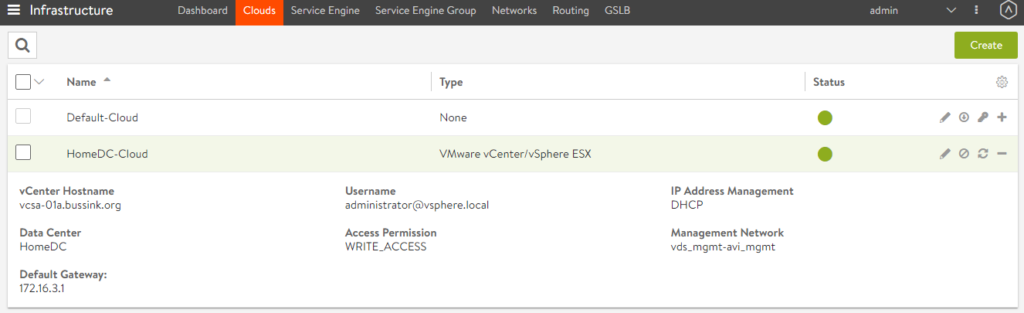

Once the Controllers are deployed, we define a Cloud Infrastructure. Here I have configured my vCenter as the target. With administrator credentials, the Controller will be able to provision the required Service Engines on the cluster.

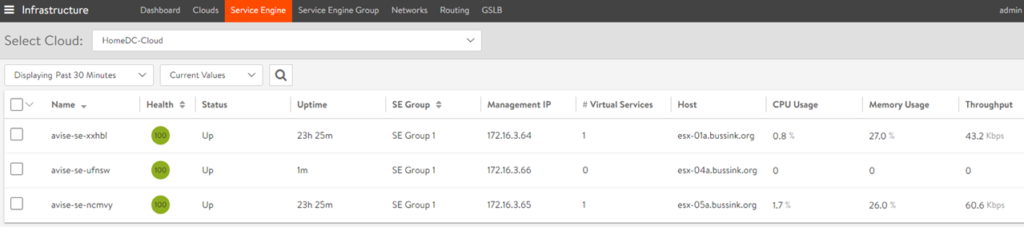

The Service Engines are lightweight data plane engines that distribute connections based on load-balancing algorithms or HTTP(S) headers. They sit in front of the back-end servers, load-balance the traffic and execute all the data plane application delivery operations: monitoring the health and testing the performance of the back-end servers, persisting requests to back-end servers, caching response content for potential re-use, protecting against security threats (DoS, suspicious client IPs), delivering high-performance web security with iWAF, and offloading SSL decryption from the back-end servers, re-encrypting if required.
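To illustrate what "distributing connections based on load-balancing algorithms" means, here is a small sketch of two classic algorithms, round robin and least connections. This is a generic illustration and not how the Service Engines implement them. The server names are hypothetical.

```python
from itertools import cycle
from typing import Dict, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Return an endless iterator that hands out servers in rotating order."""
    return cycle(servers)


def least_connections(active: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections.

    On a tie, the server listed first in the dictionary wins.
    """
    return min(active, key=active.get)


if __name__ == "__main__":
    pool = ["web-01", "web-02", "web-03"]  # hypothetical pool members
    rr = round_robin(pool)
    print([next(rr) for _ in range(4)])

    # Hypothetical snapshot of active connections per server.
    print(least_connections({"web-01": 12, "web-02": 3, "web-03": 7}))
```

Round robin ignores the current load and simply rotates through the pool, while least connections needs a live connection count per server, which the data plane is in a natural position to track.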

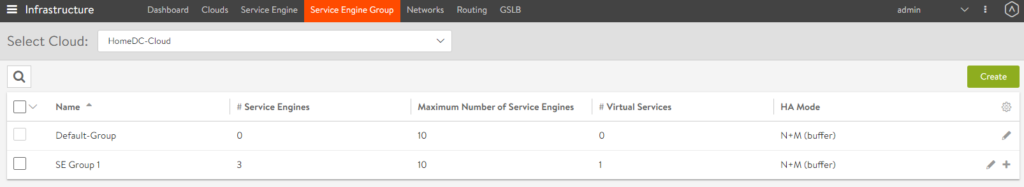

Service Engines are then assembled in a Service Engine Group, just like vSphere hosts are part of a cluster.

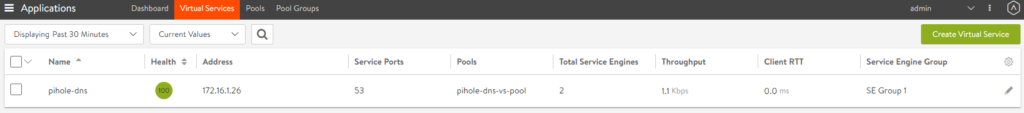

When you create a Virtual Service for a load-balanced application, this service is deployed across the Service Engine Group.
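The relationship between Service Engines, Service Engine Groups and Virtual Services can be sketched as a small data model. The classes and the "least-loaded first" placement rule below are my own assumptions for illustration. The Controller's real placement logic is not described in this post and may well differ.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ServiceEngine:
    """Hypothetical model of one Service Engine and the services it hosts."""
    name: str
    virtual_services: List[str] = field(default_factory=list)


@dataclass
class ServiceEngineGroup:
    """Hypothetical model of a group of Service Engines."""
    name: str
    engines: List[ServiceEngine]

    def place(self, vs_name: str, copies: int = 2) -> List[str]:
        """Place a Virtual Service on the `copies` least-loaded engines."""
        if copies > len(self.engines):
            raise ValueError("not enough Service Engines in the group")
        # sorted() is stable, so equally loaded engines keep their listed order.
        by_load = sorted(self.engines, key=lambda se: len(se.virtual_services))
        targets = by_load[:copies]
        for se in targets:
            se.virtual_services.append(vs_name)
        return [se.name for se in targets]


if __name__ == "__main__":
    group = ServiceEngineGroup(
        name="Default-Group",  # example name
        engines=[ServiceEngine("se-1"), ServiceEngine("se-2"), ServiceEngine("se-3")],
    )
    print(group.place("vs-web", copies=2))
```

The point of the model is only that a Virtual Service is attached to the group rather than to one specific engine, in the same way a VM belongs to a cluster rather than to a single host.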

In the next blog post, I will cover more details of the configuration and the deployment of a simple Load Balanced workload.