Okay, the original title was going to be ‘InfiniBand in the lab… who can afford 10/40 GbE’. I’ve looked at 10GbE switches in the past, and nearly pulled the trigger a few times. Even though the prices of switches like the Netgear ProSafe or Cisco SG500X are coming down, the cost of 10GbE adapters is still high. Having tested VSAN in the lab, I knew I wanted more speed for replication and data access than I had experienced. The kick in the butt in network acceleration that I have chosen is InfiniBand.

If you search on eBay, you will find lots of very cheap InfiniBand host channel adapters (HCAs) and cables. A dual-port 20Gbps adapter will cost you between $40 and $80. Cables vary between $15 and up to $150, depending on the type of cable. One interesting fact is that you can use InfiniBand in a point-to-point configuration. Each InfiniBand network needs a Subnet Manager, which configures the fabric, somewhat akin to Fibre Channel zoning.

InfiniBand Data Rates

An InfiniBand link is a serial link operating at one of five data rates: single data rate (SDR), double data rate (DDR), quad data rate (QDR), fourteen data rate (FDR), and enhanced data rate (EDR). The figures below are the signaling rates of the common 4x link (four lanes aggregated).

- 10 Gbps or Single Data Rate (SDR)

- 20 Gbps or Double Data Rate (DDR)

- 40 Gbps or Quad Data Rate (QDR)

- 56 Gbps or Fourteen Data Rate (FDR)

- 100 Gbps or Enhanced Data Rate (EDR)

- 2014 will see the announcement of High Data Rate (HDR)

- And the roadmap continues with Next Data Rate (NDR)
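The list gives raw signaling rates, but SDR, DDR and QDR use 8b/10b line encoding while FDR and EDR use the more efficient 64b/66b, so the usable bandwidth is lower. Here is a small Python sketch of that arithmetic for a 4x link. The per-lane baud rates are the commonly published values, and the `RATES` table and `link_rates` function are my own illustration, not part of any tool.

```python
# Per-lane signaling rate (Gbaud) and line-encoding efficiency per InfiniBand generation.
# These are the commonly published values; a 4x link aggregates four lanes.
RATES = {
    "SDR": (2.5, 8 / 10),        # 8b/10b encoding
    "DDR": (5.0, 8 / 10),        # 8b/10b encoding
    "QDR": (10.0, 8 / 10),       # 8b/10b encoding
    "FDR": (14.0625, 64 / 66),   # 64b/66b encoding
    "EDR": (25.78125, 64 / 66),  # 64b/66b encoding
}


def link_rates(name: str, lanes: int = 4) -> tuple[float, float]:
    """Return (signaling rate, usable data rate) in Gbps for a link with `lanes` lanes."""
    baud, efficiency = RATES[name]
    signaling = baud * lanes
    return signaling, signaling * efficiency


if __name__ == "__main__":
    for name in RATES:
        signaling, data = link_rates(name)
        print(f"{name}: {signaling:.2f} Gbps signaling, {data:.2f} Gbps data (4x)")
```

Run as a script, it shows that an SDR 4x port, like the ones on the switch described below, carries about 8 Gbps of data. It also shows that EDR's 103.125 Gbps of signaling works out to 100 Gbps of payload.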

There is a great InfiniBand entry on Wikipedia that discusses the different InfiniBand signaling rates in more detail.

InfiniBand Host Channel Adapters

Two weeks ago, I came across a great lead and some information that pushed me to purchase six InfiniBand adapters.

- 3x Mellanox InfiniBand MHGH28-XTC Dual Port DDR/CX4 (PCIe Gen2) at $50.

- 3x Mellanox InfiniBand MCX354A-FCBT CX354A Dual Port FDR/QDR (PCIe Gen3) at $300.

InfiniBand Physical Interconnection

Early InfiniBand used copper CX4 cables for SDR and DDR rates with 4x ports. The same cable type is also commonly used to connect SAS (Serial Attached SCSI) HBAs to external SAS disk arrays; with SAS, this is known as an SFF-8470 connector, and it is referred to as an “InfiniBand-style” connector.

Cisco 10GB CX4 to CX4 Infiniband Cable 1.5 m

The latest connectors used with 4x ports at QDR and FDR speeds are QSFP (Quad Small Form-factor Pluggable), which can be copper or fiber depending on the length required.

InfiniBand Switch

While you can build a triangle configuration with three hosts using dual-port cards, as Vladan Seget (@Vladan) describes in his very interesting article Homelab Storage Network Speed with InfiniBand, I wanted to see how an InfiniBand switch would work. I only invested in an older SilverStorm 9024-CU24-ST2, which supports only 10Gbps SDR ports. But it has 24 of them, which is not bad for a $400 switch.

In my configuration, each dual-port Mellanox MHGH28-XTC (DDR capable) connects to my SilverStorm switch at only SDR 10Gbps speed, but I have two ports from each host. I can also increase the number of hosts connected to the switch, and use a single Subnet Manager and a single IPoIB (IP over InfiniBand) addressing scheme. At present, I think this single IPoIB addressing scheme may be what matters most for implementing VSAN in the lab.
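Here are the triangle and switch trade-offs in numbers. In a switchless full mesh, every host needs a port for every other host. Each cable also forms its own point-to-point fabric, which needs its own Subnet Manager. The hypothetical helpers below (`full_mesh`, `switched`, `max_mesh_hosts`) sketch that arithmetic, assuming every HCA port is cabled.

```python
def full_mesh(hosts: int) -> dict[str, int]:
    """Cabling needs for a switchless full mesh where every host links to every other host."""
    links = hosts * (hosts - 1) // 2
    return {
        "ports_per_host": hosts - 1,
        "cables": links,
        # each point-to-point cable is a separate fabric needing its own Subnet Manager
        "fabrics": links,
    }


def switched(hosts: int, ports_per_host: int = 2) -> dict[str, int]:
    """Cabling needs when every HCA port is cabled to one switch (a single fabric)."""
    return {"ports_per_host": ports_per_host, "cables": hosts * ports_per_host, "fabrics": 1}


def max_mesh_hosts(hca_ports: int) -> int:
    """Largest full mesh possible when each host has `hca_ports` ports."""
    return hca_ports + 1


if __name__ == "__main__":
    print("mesh, 3 hosts:", full_mesh(3))
    print("switch, 3 hosts:", switched(3))
    print("max mesh size with dual-port HCAs:", max_mesh_hosts(2))
```

With dual-port cards, three hosts is the ceiling for a full mesh (three cables, three fabrics). A fourth host would need three ports per card, while the switch keeps every host in one fabric with one Subnet Manager.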

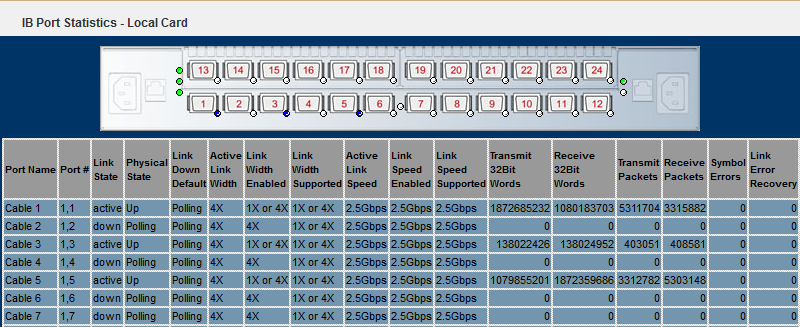

The screenshot above shows the IB Port Statistics with three vSphere 5.1 hosts connected (one cable per ESXi host, as I’m waiting on a second batch of CX4 cables).

The surprise when connecting to the SilverStorm 9024 switch was that it did not include a Subnet Manager. Fortunately, Raphael Schitz (@hypervisor_fr), with the work and help of others (William Lam and Stjepan Groš) and great tools (the ESX Community Packaging Tool by Andreas Peetz, @vFrontDE), has successfully repackaged the OpenFabrics Enterprise Distribution OpenSM (Subnet Manager) so that it can be loaded on vSphere 5.0 and vSphere 5.1. This vSphere installable VIB can be found in his blog article InfiniBand@home votre homelab a 20Gbps (in French: “InfiniBand@home, your homelab at 20Gbps”).

The link states in the screenshot above went to Active once ib-opensm was installed on the vSphere 5.1 hosts, the MTU was set, and the partitions.conf configuration file was written. Without Raphael’s ib-opensm, my InfiniBand switch would have been on its own and would not have passed the IPoIB traffic in my lab.

Installing the InfiniBand Adapters in vSphere 5.1

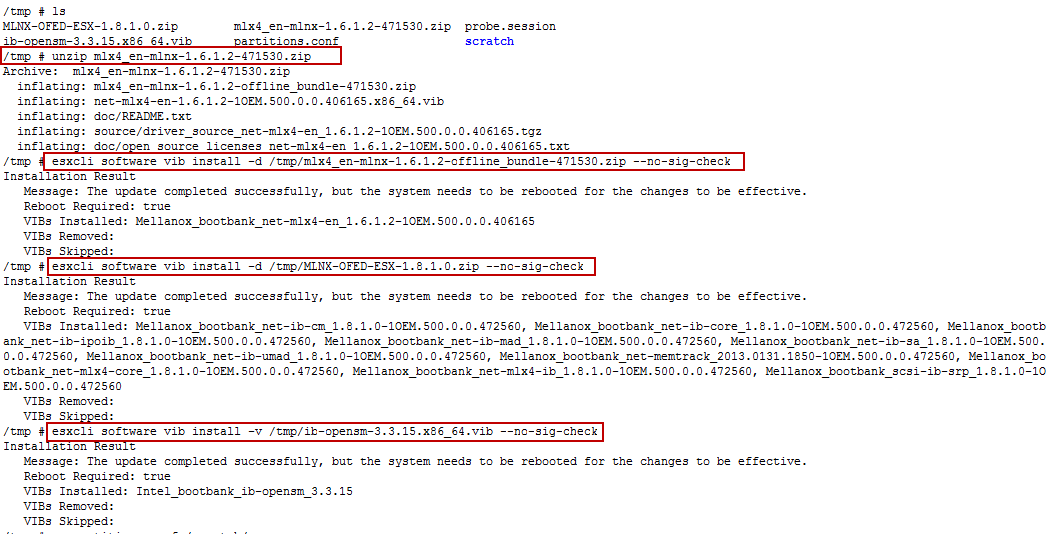

Here is the process I used to install the InfiniBand drivers after adding the Host Channel Adapters. You will need three files:

- VMware’s Mellanox 10Gb Ethernet driver, which supports products based on Mellanox ConnectX Ethernet adapters

- Mellanox InfiniBand OFED Driver for VMware vSphere 5.x

- OpenFabrics.org Enterprise Distribution’s OpenSM for VMware vSphere 5.1 packaged by Raphael Schitz

You will need to transfer these three packages to each vSphere 5.x host and install them with the esxcli command line. Before installing the VMware Mellanox ConnectX driver, you need to unzip the downloaded file, because it is the offline bundle zip inside it that you supply to the ‘esxcli software vib install’ command. I push all the files over SSH into the /tmp folder. I recommend putting the host in maintenance mode, as you will need to reboot after the drivers are installed.

The commands, run from the /tmp folder, are:

- `unzip mlx4_en-mlnx-1.6.1.2-471530.zip`

- `esxcli software vib install -d /tmp/mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip --no-sig-check`

- `esxcli software vib install -d /tmp/MLNX-OFED-ESX-1.8.1.0.zip --no-sig-check`

- `esxcli software vib install -v /tmp/ib-opensm-3.3.15.x86_64.vib --no-sig-check`

Be careful with ib-opensm: because it is a standalone .vib rather than an offline bundle, the esxcli `-d` (depot) option becomes `-v` (VIB).
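The `-d`/`-v` distinction is easy to get wrong when repeating the install on several hosts. As a sketch, the hypothetical helper below picks the flag from the file extension: `-d` for an offline bundle .zip and `-v` for a standalone .vib. It then prints the three install commands so you can paste them into an SSH session. It only builds strings and never contacts a host.

```python
from pathlib import PurePosixPath


def esxcli_install_cmd(path: str, no_sig_check: bool = True) -> list[str]:
    """Build an `esxcli software vib install` command for a package already on the host.

    Offline bundles (.zip) are passed with -d (depot); standalone .vib files with -v.
    """
    suffix = PurePosixPath(path).suffix.lower()
    if suffix == ".zip":
        flag = "-d"
    elif suffix == ".vib":
        flag = "-v"
    else:
        raise ValueError(f"unsupported package type: {path}")
    cmd = ["esxcli", "software", "vib", "install", flag, path]
    if no_sig_check:
        cmd.append("--no-sig-check")  # these community/driver packages were installed unsigned
    return cmd


# The three packages from the post, after unzipping the VMware driver download into /tmp.
PACKAGES = [
    "/tmp/mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip",
    "/tmp/MLNX-OFED-ESX-1.8.1.0.zip",
    "/tmp/ib-opensm-3.3.15.x86_64.vib",
]

if __name__ == "__main__":
    for pkg in PACKAGES:
        print(" ".join(esxcli_install_cmd(pkg)))
```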

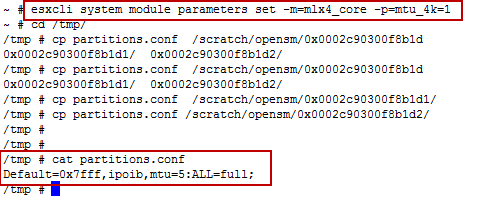

At this point, reboot the host. Once the host comes back up, there are two more steps. The first is to enable the 4K InfiniBand MTU in the mlx4_core module, so that the MTU can be set to 4092. The second is to configure OpenSM for each adapter with the partitions.conf file.

The partitions.conf file is a simple one-line file containing the following configuration:

`Default=0x7fff,ipoib,mtu=5:ALL=full;`
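This line defines a partition named Default with partition key 0x7fff and enables IPoIB on it. It also sets `mtu=5`, the InfiniBand code for a 4096-byte MTU (codes 1 through 4 are 256, 512, 1024 and 2048 bytes), and gives ALL ports full membership. The minimal parser below is my own sketch and handles only a single-partition line like this one, not OpenSM's full grammar. It also shows where the 4092 figure comes from, assuming IPoIB datagram mode, in which the 4-byte IPoIB header travels inside the 4096-byte InfiniBand MTU.

```python
# InfiniBand MTU codes accepted by the "mtu=" flag in OpenSM's partitions.conf.
IB_MTU = {1: 256, 2: 512, 3: 1024, 4: 2048, 5: 4096}
IPOIB_HEADER = 4  # bytes of IPoIB encapsulation header inside each IB packet


def parse_partition(line: str) -> dict:
    """Parse a simple single-partition line: Name=PKey[,flag[=value]...]:Member[=membership];"""
    definition, members = line.strip().rstrip(";").split(":", 1)
    name_pkey, *flags = definition.split(",")
    name, pkey = name_pkey.split("=", 1)
    entry = {"name": name, "pkey": int(pkey, 16), "ipoib": False, "members": {}}
    for flag in flags:
        key, _, value = flag.partition("=")
        if key == "ipoib":
            entry["ipoib"] = True
        elif key == "mtu":
            entry["ib_mtu"] = IB_MTU[int(value)]
    for member in members.split(","):
        port, _, membership = member.partition("=")
        entry["members"][port] = membership  # empty string if no membership was given
    return entry


def ipoib_mtu(entry: dict) -> int:
    """IPoIB datagram-mode MTU: the InfiniBand MTU minus the 4-byte IPoIB header."""
    return entry["ib_mtu"] - IPOIB_HEADER


if __name__ == "__main__":
    part = parse_partition("Default=0x7fff,ipoib,mtu=5:ALL=full;")
    print(part, "-> IPoIB MTU", ipoib_mtu(part))
```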

The commands are:

- `esxcli system module parameters set -m mlx4_core -p mtu_4k=1`

- `cp partitions.conf /scratch/opensm/adapter_1_hca/`

- `cp partitions.conf /scratch/opensm/adapter_2_hca/`

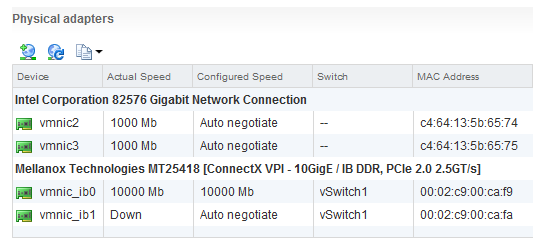

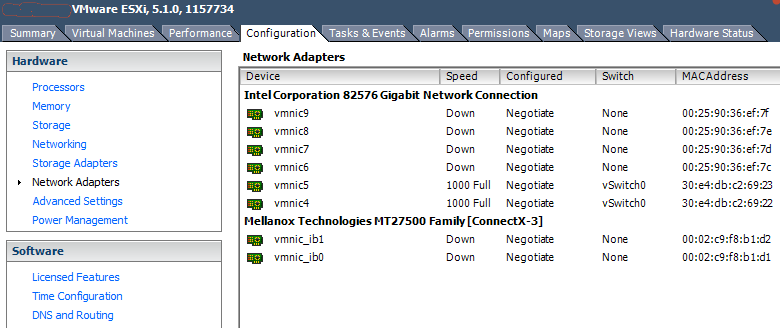

At this point, you will be able to configure the Mellanox adapters in the vSphere Web Client (ConnectX for the MHGH28-XTC).

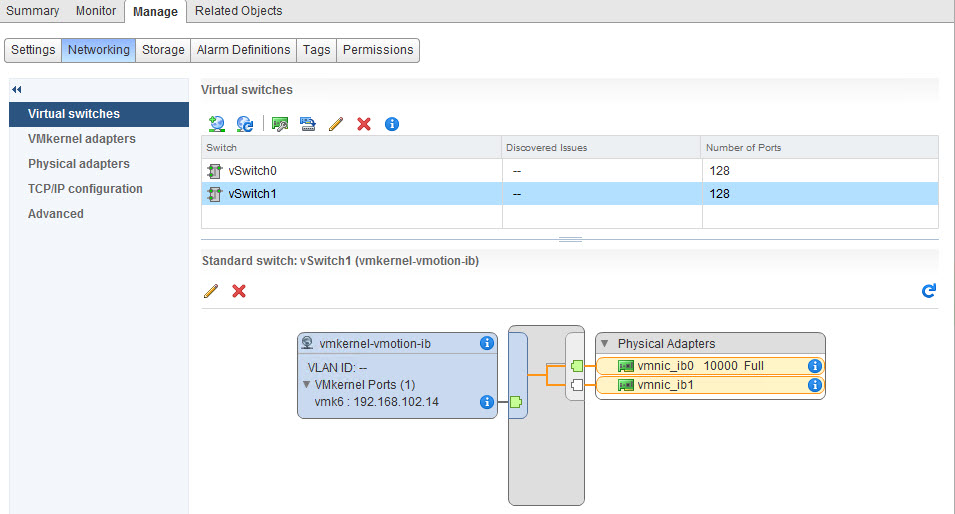

The vSwitch view is as follows:

Configuring the Mellanox adapter in the vSphere Client (ConnectX-3 for the MCX354A-FCBT).

I’m still waiting on the delivery of some QSFP cables for the ConnectX-3 adapters. This configuration will use a triangle design until I find a QDR switch at a reasonable cost.

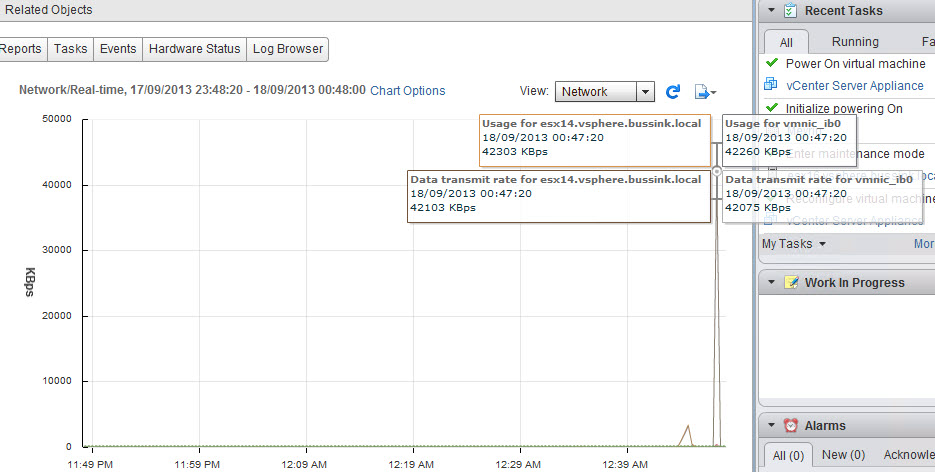

This article wouldn’t be complete without a benchmark. Here is a screenshot I quickly took while vMotioning the vCenter Server Appliance, which I had bumped to 4 vCPUs and 22GB of memory, between two hosts with SDR (10Gbps) connectivity.
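As a rough yardstick for that test, the sketch below estimates how long it takes just to copy 22GB of memory once over a 4x SDR link. It assumes 8b/10b encoding (8 Gbps usable) and ignores IPoIB/TCP overhead, vMotion's re-copying of dirty pages, and any pages vMotion can skip. `estimate_copy_seconds` is a hypothetical helper, and its result is an estimate, not a measurement.

```python
def estimate_copy_seconds(memory_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Rough time to copy `memory_gb` gigabytes once over a link of `link_gbps` signaling rate.

    `efficiency` models line encoding (0.8 for 8b/10b on SDR/DDR/QDR). Protocol overhead,
    re-sent dirty pages and skipped pages are all ignored, so this is only a ballpark figure.
    """
    payload_gbit = memory_gb * 8  # GB -> Gbit (decimal units)
    return payload_gbit / (link_gbps * efficiency)


if __name__ == "__main__":
    print(f"SDR: {estimate_copy_seconds(22, 10):.1f} s")
    print(f"DDR: {estimate_copy_seconds(22, 20):.1f} s")
```

For 22GB over SDR, this works out to about 22 seconds. The same copy over a DDR (20Gbps) link would take about 11 seconds.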

This is where I’m going to stop for now. Hope you enjoyed it.