Before I start on a blog post about how I implemented InfiniBand in the lab, I wanted to give a quick backstory on my lab, which is located in the office. I do have a small homelab running VSAN, but this is my larger lab.

This is a quick summary of my recent lab adventures. The difference between the lab and the homelab is its location. I'm privileged that the company I work for is allowing me to use 12U in the company datacenter. They provide the electricity and the cooling; the rest of what happens inside those 12U is mine. The only promise I had to make is that I would not run external commercial services on this infrastructure.

Early last year, when Cisco announced the newer M3 servers with Sandy Bridge processors, I purchased a set of Cisco UCS C-Series M2 (Westmere processor) servers at a real bargain (to me at least).

I had gotten three Cisco UCS C200 M2 servers with a single Xeon 5649 (6 cores @ 2.5GHz) and 4GB of memory, and three Cisco UCS C210 M2 servers with a single Xeon 5649. At that point I purchased some Kingston 8GB DIMMs to increase each host to 48GB (6x 8GB), and the last one got all the 6x 4GB DIMMs.

It took me quite a few months to pay for all this infrastructure.

This summer, with the release of the next generation of Intel Xeon Ivy Bridge processors (E5-2600 v2), the Westmere series of processors is starting to fade from the price lists. At the same time, some large social networking companies are shedding equipment. As a result, I was able to find a set of 6x Xeon L5639 (6 cores @ 2.1GHz) on eBay, and I have just finished adding them to the lab. I don't really need the additional CPU resources, but I do want the capability to expand the memory of each server past the original 6 DIMMs I purchased, since the remaining DIMM slots are only usable with a second processor installed.

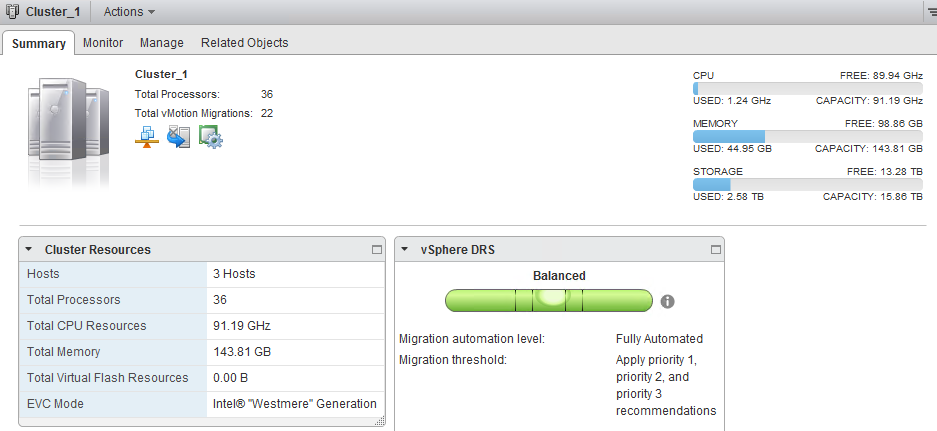

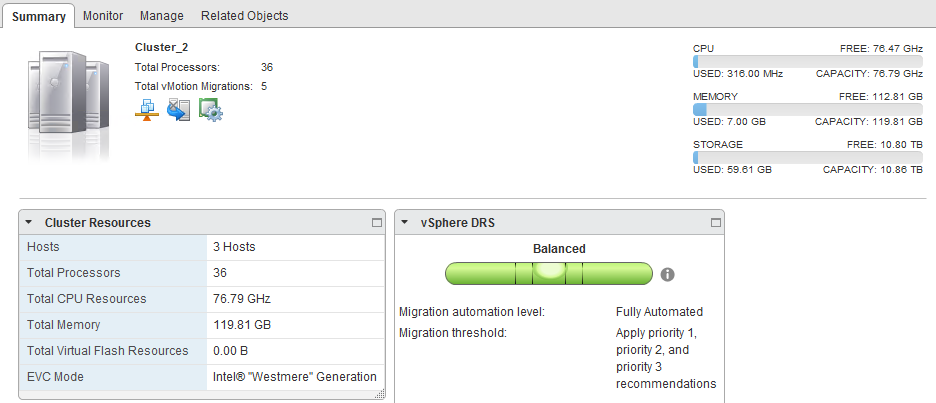

The lab is composed of two clusters: one with the C200 M2 servers with dual Xeon 5649, and one with the C210 M2 servers with dual Xeon L5639.

The clusters are really empty now, as I have broken down the vCloud Director infrastructure that was available to my colleagues while I wait for the imminent release of vSphere 5.5.

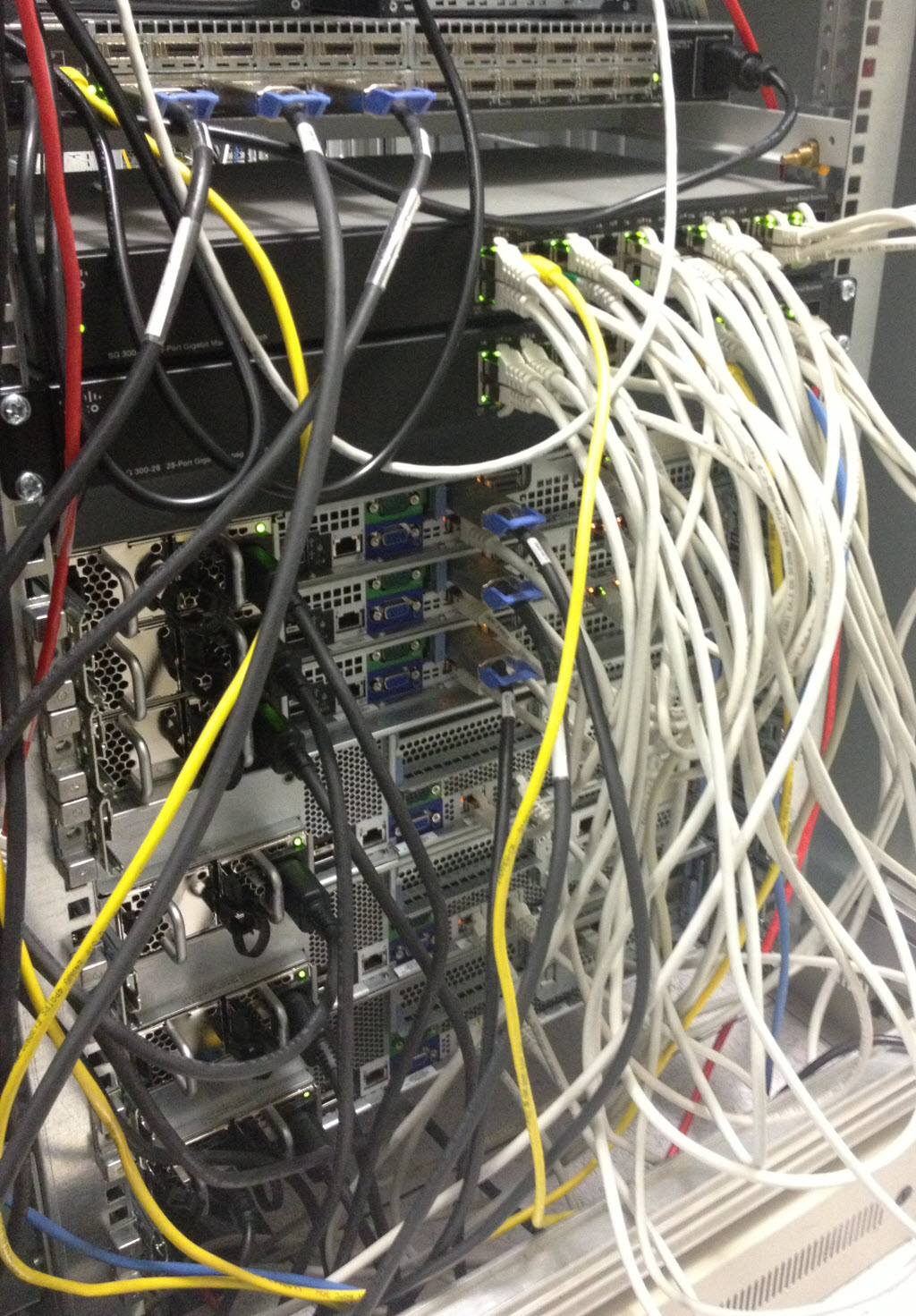

The network is built on two Cisco SG300-28 switches with a LAG trunk between them.

For a long time, I have been searching for a faster backbone for these two Cisco SG300-28 switches. Prices on 10GbE switches have come down, and some very interesting contenders are the Cisco SG500X series with 4 SFP+ ports, or the Netgear ProSafe XS708E or XS712T switches. While these switches are just about affordable for a privately funded lab, the cost of the adapters would make it expensive. I've tried to find an older 10GbE switch, and tried to coax some suppliers into giving me their old Nexus 5010 switches, but without much success.

The revelation for an affordable and fast network backbone came from InfiniBand. Like others, I've known about InfiniBand for years, and I've seen my share of it in datacenters left and right (HPC clusters, Oracle Exadata racks). But only this summer did I see French blogger Raphael Schitz (@hypervisor_fr) write what we all wanted to have: "InfiniBand@home votre homelab a 20Gbps" (in French; "InfiniBand@home: your homelab at 20Gbps"). Vladan Seget (@Vladan) has followed up on the topic with a great article, "Homelab Storage Network Speed with InfiniBand". Three weeks ago, I took the plunge and ordered my own InfiniBand interfaces, InfiniBand cables and, to try my hand at it, even an InfiniBand switch. Follow me in the next article as I build a cheap yet fast network backbone for my lab.

Having had the opportunity to test VSAN in the homelab, I've noticed that once you are running a dozen virtual machines, you really want to migrate from the gigabit network to the recommended VSAN network speed of 10GbE. If you just plan to validate VSAN in the homelab, gigabit is fine, but if you plan to run the homelab on VSAN, you will quickly find things sluggish.