I recently redesigned my network configuration, moving from a single /24 address range to multiple /24 ranges with routing done on my core switch. Part of this change meant shutting down the vSphere cluster and the storage arrays (Synology), and reassigning new IP addresses and gateways to all of them.

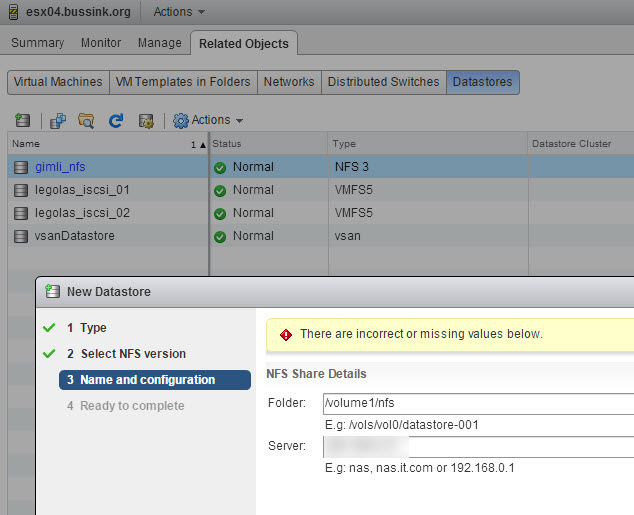

Once my hosts and storage arrays had their new IP addresses, I tried to re-mount my NFS volumes from the Synology and got a strange error message: “There are incorrect or missing values below.”

When I tried the same operation from the ESXi shell, over an SSH session directly on the host, I got a different error: “Unable to add new NAS, volume with the label X already exists.”

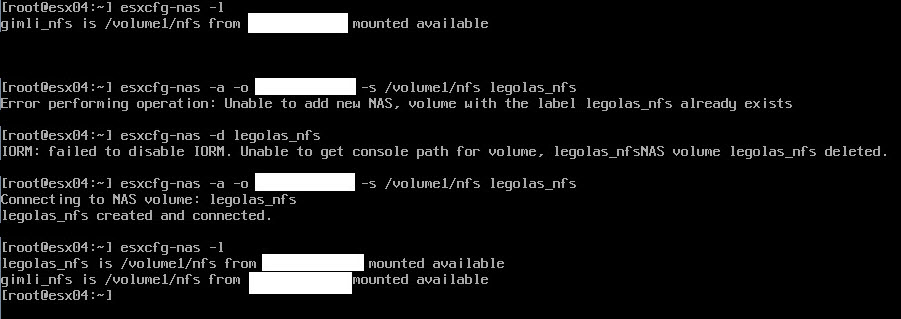

Yet the `esxcfg-nas -l` command returned no entries at all.

It seems the old NAS entry is still registered on the ESXi host, even though it is not listed. To fix this small issue, delete the hidden NFS mount point and then recreate it.

The next screenshot shows me listing my mount points, attempting to mount the new NFS volume (legolas_nfs), deleting the hidden entry, and finally adding the new NFS volume.
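As a rough sketch, that sequence can be written out as `esxcfg-nas` commands (`-l` lists NAS mounts, `-d <label>` deletes one, `-a -o <host> -s <share> <label>` adds one). The helper below is hypothetical: it only builds the command strings and does not run them, it assumes the hidden entry uses the same label as the volume you are re-adding, and the Synology address and export path are placeholders for your own values.

```python
import shlex
from typing import List


def stale_nfs_fix_commands(label: str, nas_host: str, share: str) -> List[str]:
    """Build the esxcfg-nas commands to clear a hidden NAS entry and re-add it.

    Hypothetical helper: assumes the stale entry carries the same label
    as the volume being re-added. It only returns strings; nothing is executed.
    """
    q = shlex.quote  # quote values so labels with spaces survive the shell
    return [
        # List the NAS mounts the host reports (the stale entry may be missing here).
        "esxcfg-nas -l",
        # Delete the hidden entry that still holds the datastore label.
        f"esxcfg-nas -d {q(label)}",
        # Re-add the NFS export under the same label, pointing at the NAS's new address.
        f"esxcfg-nas -a -o {q(nas_host)} -s {q(share)} {q(label)}",
        # List again to confirm the new mount now appears.
        "esxcfg-nas -l",
    ]


if __name__ == "__main__":
    # Placeholder values: substitute your Synology IP, export path, and label.
    for cmd in stale_nfs_fix_commands("legolas_nfs", "192.168.20.10", "/volume1/legolas_nfs"):
        print(cmd)
```

Paste the printed commands into an SSH session on the ESXi host, one at a time, checking the output of each step before moving on.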

I hope this can save someone some precious time.