A follow-up to my adventures with InfiniBand in the lab... and the vBrownbag Tech Talk about InfiniBand in the lab I did at VMworld 2013 in Barcelona.

In this post I will cover how to install the InfiniBand drivers and various protocols in vSphere 5.5. This post and the commands below only apply if you are using neither Mellanox ConnectX-3 VPI Host Channel Adapters nor an InfiniBand switch with a hardware-integrated Subnet Manager. Mellanox states that the ConnectX-3 VPI should allow normal IP over InfiniBand (IPoIB) connectivity with the default 1.9.7 drivers on the ESXi 5.5.0 install CD-ROM.

This post will be most useful to people who have one of the following configurations:

- Two ESXi 5.5 hosts with direct InfiniBand host-to-host connectivity (no InfiniBand switch)

- Two/Three ESXi 5.5 hosts with InfiniBand host-to-storage connectivity (no InfiniBand switch and a storage array like Nexenta Community Edition)

- Multiple ESXi 5.5 hosts with an InfiniBand switch that doesn’t have a Subnet Manager

Installation in these configurations has only been possible since early this morning (October 22nd at 00:08 CET), when Raphael Schitz (@hypervisor_fr) released an updated OpenSM, version 3.3.16-64, compiled as 64-bit for use on vSphere 5.5 and vSphere 5.1.

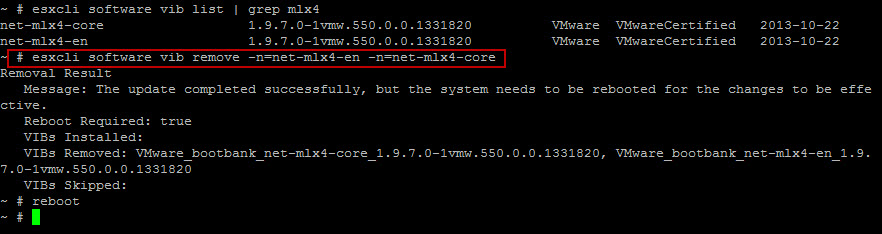

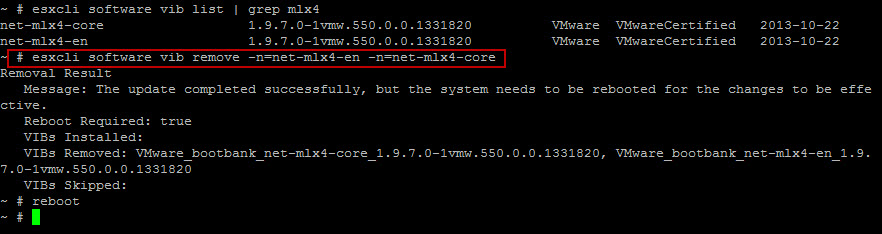

First things first… let’s rip the Mellanox 1.9.7 drivers out of a new ESXi 5.5.0 install.

Removing Mellanox 1.9.7 drivers from ESXi 5.5

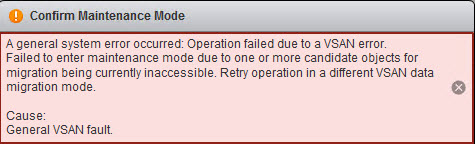

Yes, the first step to get IP over InfiniBand (for VMkernel adapters like vMotion or VSAN) or SCSI RDMA Protocol (SRP) working is to remove the new Mellanox 1.9.7 drivers from the newly installed ESXi 5.5.0. These drivers don’t work with the older Mellanox OFED 1.8.2 package, and the new OFED 2.0 package is still pending… Let’s cross our fingers for an early 2014 release.

Connect to your ESXi 5.5 host using SSH and run the following command. You will then need to reboot the host for the driver to be removed from memory.

- esxcli software vib remove -n=net-mlx4-en -n=net-mlx4-core

- reboot the ESXi host

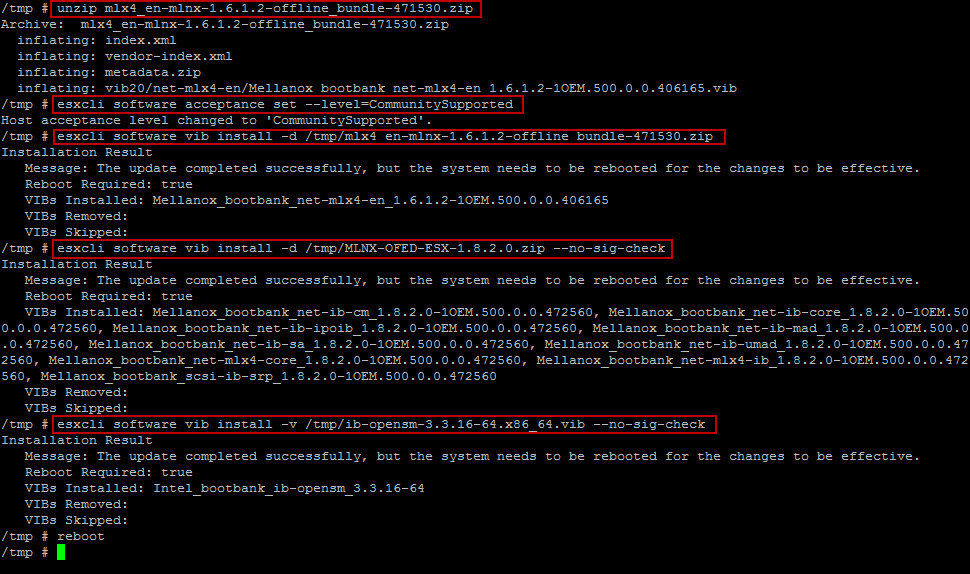

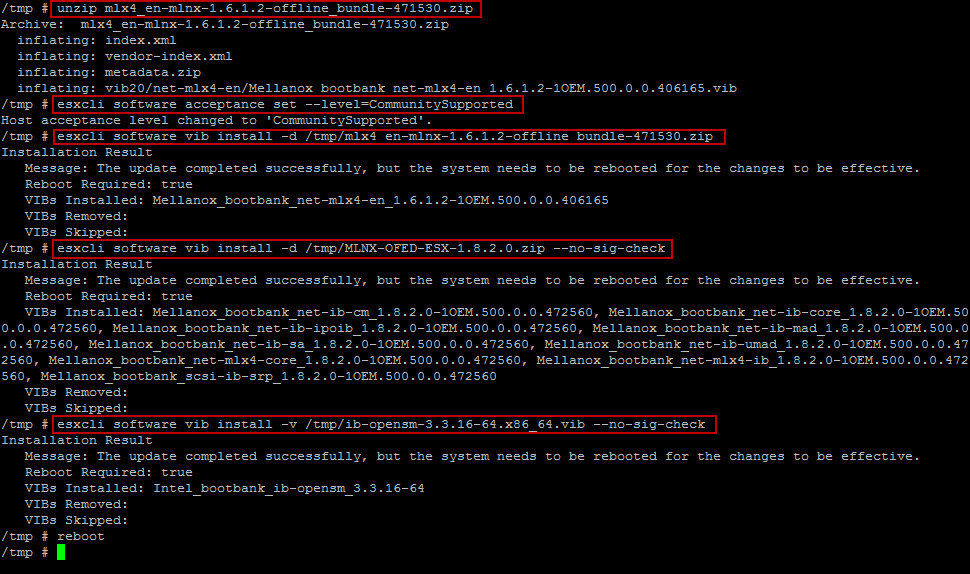
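Before and after the reboot it can help to confirm which Mellanox VIBs are actually installed. The following is a minimal sketch, assuming `esxcli software vib list` prints one VIB per line with the VIB name on it; the function name `list_mlx4_vibs` is my own and not part of any tool.

```shell
# List installed VIBs whose line mentions mlx4 (case-insensitive).
# Prints nothing and returns a non-zero status when no such VIB is found.
list_mlx4_vibs() {
    esxcli software vib list | grep -i 'mlx4'
}
```

Running `list_mlx4_vibs` after the removal and reboot should print nothing if the 1.9.7 drivers are gone.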

Installing Mellanox 1.6.1 drivers, OFED and OpenSM

After the reboot you will need to download the following files and copy them to /tmp on the ESXi 5.5 host:

- VMware ESXi 5.0 Driver 1.6.1 for Mellanox ConnectX Ethernet Adapters (Requires myVMware login)

- Mellanox InfiniBand OFED 1.8.2 Driver for VMware vSphere 5.x

- OpenFabrics.org Enterprise Distribution’s OpenSM 3.3.16-64 for VMware vSphere 5.5 (x86_64) packaged by Raphael Schitz

Once the files are in /tmp (or on shared storage, if you want to keep a copy there), unzip the Mellanox 1.6.1 driver file. Be careful with ib-opensm-3.3.16-64: it is a single .vib file rather than an offline bundle, so the esxcli -d option becomes -v during the install. The other change since vSphere 5.1 is that we need to set the esxcli software acceptance level to CommunitySupported in order to install some of the drivers and binaries.

The commands are

- unzip mlx4_en-mlnx-1.6.1.2-471530.zip

- esxcli software acceptance set --level=CommunitySupported

- esxcli software vib install -d /tmp/mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip --no-sig-check

- esxcli software vib install -d /tmp/MLNX-OFED-ESX-1.8.2.0.zip --no-sig-check

- esxcli software vib install -v /tmp/ib-opensm-3.3.16-64.x86_64.vib --no-sig-check

- reboot the ESXi host

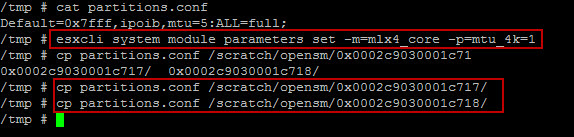

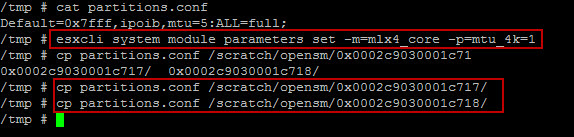
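The install steps above can be wrapped in a small function that first checks that all three files are present, so a missing download does not leave the host half installed. This is only a sketch: the function name `install_ib_stack` is hypothetical, the file names are the ones used in this post, and the staging directory should be given as an absolute path.

```shell
# Install the three packages from a staging directory (default /tmp).
# Stops at the first missing file or failed esxcli call.
install_ib_stack() {
    dir="${1:-/tmp}"
    bundle_en="$dir/mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip"
    bundle_ofed="$dir/MLNX-OFED-ESX-1.8.2.0.zip"
    vib_opensm="$dir/ib-opensm-3.3.16-64.x86_64.vib"

    # Pre-flight check: all files must exist before anything is installed.
    for f in "$bundle_en" "$bundle_ofed" "$vib_opensm"; do
        [ -f "$f" ] || { echo "missing: $f" >&2; return 1; }
    done

    esxcli software acceptance set --level=CommunitySupported || return 1
    # Offline bundles (.zip) use -d, the single .vib file uses -v.
    esxcli software vib install -d "$bundle_en" --no-sig-check || return 1
    esxcli software vib install -d "$bundle_ofed" --no-sig-check || return 1
    esxcli software vib install -v "$vib_opensm" --no-sig-check || return 1
    echo "done, reboot the host to load the drivers"
}
```

Call it as `install_ib_stack /tmp` after unzipping the Mellanox 1.6.1 driver file, then reboot the host.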

Setting MTU and Configuring OpenSM

After the reboot we have a few more commands to run.

- esxcli system module parameters set -m=mlx4_core -p=mtu_4k=1

- cp partitions.conf /scratch/opensm/<adapter_1_hca>/

- cp partitions.conf /scratch/opensm/<adapter_2_hca>/

The partitions.conf file only contains the following text:

- Default=0x7fff,ipoib,mtu=5:ALL=full;

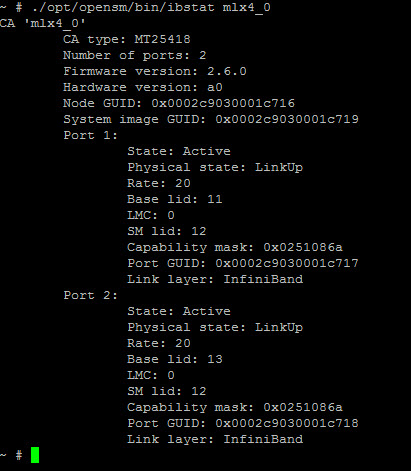

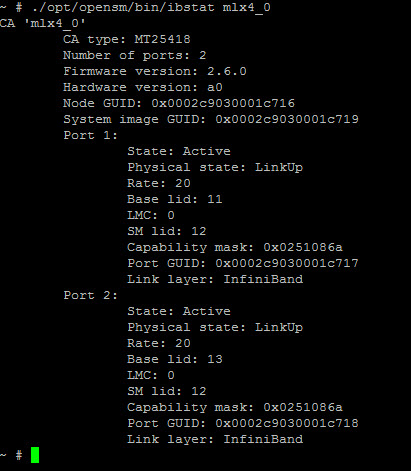
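Instead of copying the file by hand, the one-line partitions.conf can be written directly into each adapter directory. The sketch below assumes that every subdirectory of /scratch/opensm belongs to one HCA port, which matches the layout described above but is worth checking on your host; the function name `write_partitions_conf` is hypothetical.

```shell
# Write the one-line partitions.conf into every per-HCA directory under
# the given OpenSM directory (default /scratch/opensm).
# mtu=5 is the InfiniBand MTU code for 4096 bytes (4 would mean 2048).
write_partitions_conf() {
    base="${1:-/scratch/opensm}"
    for hca_dir in "$base"/*/; do
        # Skip the unexpanded pattern when there are no subdirectories.
        [ -d "$hca_dir" ] || continue
        printf '%s\n' 'Default=0x7fff,ipoib,mtu=5:ALL=full;' > "${hca_dir}partitions.conf"
        echo "wrote ${hca_dir}partitions.conf"
    done
}
```

Running `write_partitions_conf` with no argument targets /scratch/opensm; passing another directory is handy for a dry run.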

I recommend that you check the state of your InfiniBand adapters (mlx4_0) using the following command

- /opt/opensm/bin/ibstat mlx4_0

I also recommend that you write down the adapter HCA Port GUID numbers if you are going to use SCSI RDMA Protocol between the ESXi host and a storage array that supports it. It will come in handy later (and in an upcoming post).
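To avoid copying the GUIDs by eye, the ibstat output can be filtered down to one line per port. This sketch assumes the OpenSM package's ibstat prints the same `Port N:`, `State:` and `Port GUID:` lines as the Linux version of the tool; the function name `summarize_ibstat` is my own.

```shell
# Print "port <n> <state> <guid>" for each port found in ibstat output
# read from standard input.
summarize_ibstat() {
    awk '
        /Port [0-9]+:/  { port = $2; sub(":", "", port) }   # "Port 1:" header
        /^[ \t]*State:/ { state = $2 }                      # logical link state
        /Port GUID:/    { print "port", port, state, $3 }   # GUID closes the port block
    '
}
```

Usage would be `/opt/opensm/bin/ibstat mlx4_0 | summarize_ibstat`, with the output redirected to a file if you want to keep it.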

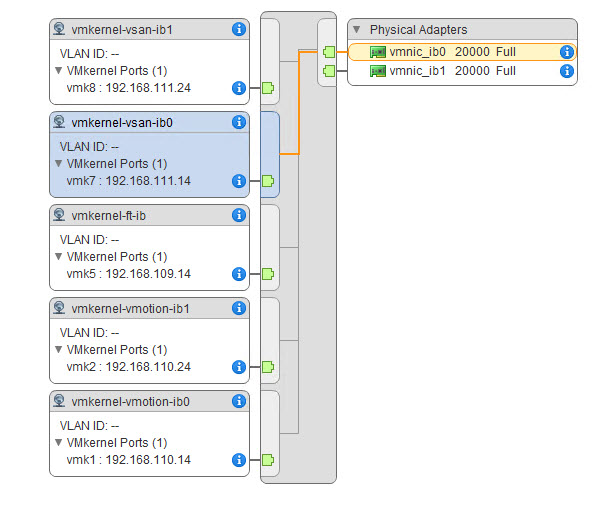

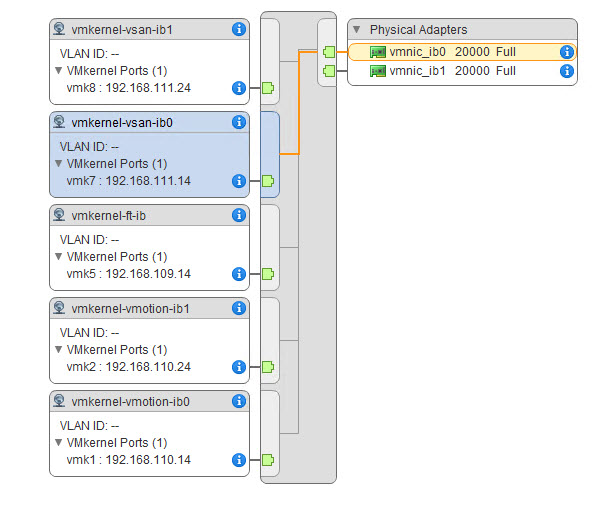

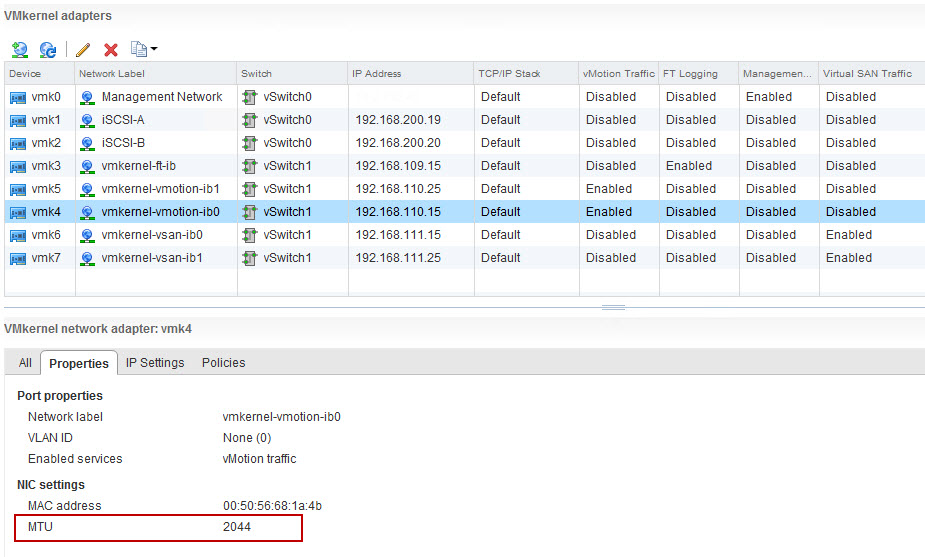

Now you are ready to add the new adapters to a vSwitch/dvSwitch and create the VMkernel adapters. Here is the current config for vMotion, VSAN and Fault Tolerance on a dual 20 Gbps IB adapter (which costs only $50!)

I aim to put the various VMkernel traffic types in their own VLANs, but I still need to dig into the partitions.conf file.

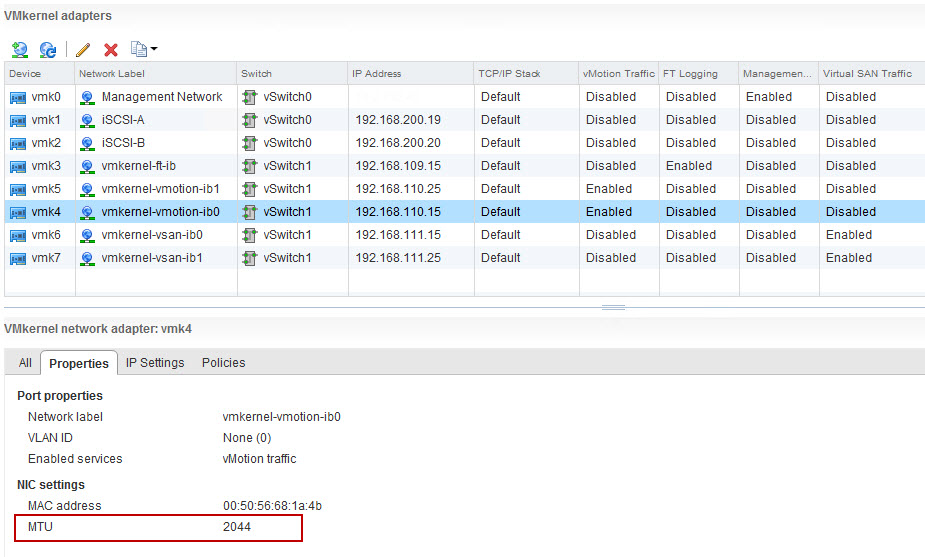

If you have an older switch that does not support an MTU of 4K, make sure you set your vSwitch/dvSwitch to an MTU of 2044 (2048-4 bytes) and the same for the various VMkernel interfaces.
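The 2044 figure comes from subtracting the 4-byte IPoIB encapsulation header from the InfiniBand link MTU. A tiny sketch of that arithmetic, with a hypothetical helper name `ipoib_mtu`:

```shell
# IPoIB MTU = InfiniBand link MTU minus the 4-byte IPoIB header.
ipoib_mtu() {
    echo $(( $1 - 4 ))
}

ipoib_mtu 2048   # prints 2044, for fabrics limited to a 2K MTU
ipoib_mtu 4096   # prints 4092, for fabrics that support a 4K MTU
```

Whichever value applies, use the same number on the vSwitch/dvSwitch and on every VMkernel interface.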

Here is a quick glossary of the various protocols that can use the InfiniBand fabric.

What is IPoIB ?

IPoIB (IP-over-InfiniBand) is a protocol that defines how to send IP packets over IB; for example, Linux has an “ib_ipoib” driver that implements this protocol. This driver creates a network interface for each InfiniBand port on the system, which makes a Host Channel Adapter (HCA) act like an ordinary Network Interface Card (NIC).

IPoIB does not make full use of the HCA's capabilities; network traffic goes through the normal IP stack, which means a system call is required for every message and the host CPU must handle breaking data up into packets, etc. However it does mean that applications that use normal IP sockets will work on top of the full speed of the IB link (although the CPU will probably not be able to run the IP stack fast enough to use a 32 Gb/sec QDR IB link).
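The 32 Gb/sec figure for QDR is the usable data rate after 8b/10b line encoding, not the 40 Gb/sec signalling rate printed on the box. As a rough sketch of the arithmetic for a 4x link (the helper name `ib_data_rate` is hypothetical, and integer shell arithmetic limits it to whole-number lane rates such as DDR and QDR):

```shell
# Usable data rate in Gb/s for a link using 8b/10b encoding:
# lanes * per-lane signalling rate * 8 / 10.
ib_data_rate() {
    lanes=$1
    lane_gbps=$2
    echo $(( lanes * lane_gbps * 8 / 10 ))
}

ib_data_rate 4 10   # QDR 4x: prints 32
ib_data_rate 4 5    # DDR 4x: prints 16
```

By the same calculation, a 20 Gbps DDR adapter like the ones used in this lab carries at most 16 Gb/sec of data.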

Since IPoIB provides a normal IP NIC interface, one can run TCP (or UDP) sockets on top of it. TCP throughput well over 10 Gb/sec is possible using recent systems, but this will burn a fair amount of CPU.

What is SRP ?

The SCSI RDMA Protocol (SRP) is a protocol that allows one computer to access SCSI devices attached to another computer via remote direct memory access (RDMA). The SRP protocol is also known as the SCSI Remote Protocol. The use of RDMA makes higher throughput and lower latency possible than what is possible through e.g. the TCP/IP communication protocol. RDMA is only possible with network adapters that support RDMA in hardware. Examples of such network adapters are InfiniBand HCAs and 10 GbE network adapters with iWARP support. While the SRP protocol has been designed to use RDMA networks efficiently, it is also possible to implement the SRP protocol over networks that do not support RDMA.

As with the iSCSI Extensions for RDMA (iSER) communication protocol, there is the notion of a target (a system that stores the data) and an initiator (a client accessing the target), with the target performing the actual data movement. In other words, when a user writes to a target, the target actually executes a read from the initiator, and when a user issues a read, the target executes a write to the initiator.

While the SRP protocol is easier to implement than the iSER protocol, iSER offers more management functionality, e.g. the target discovery infrastructure enabled by the iSCSI protocol. Furthermore, the SRP protocol never made it into an official standard. The latest draft of the SRP protocol, revision 16a, dates from July 3, 2002.

What is iSER ?

The iSCSI Extensions for RDMA (iSER) is a computer network protocol that extends the Internet Small Computer System Interface (iSCSI) protocol to use Remote Direct Memory Access (RDMA). Typically RDMA is provided by either the Transmission Control Protocol (TCP) with RDMA services (iWARP) or InfiniBand. It permits data to be transferred directly into and out of SCSI computer memory buffers (which connects computers to storage devices) without intermediate data copies.

The motivation for iSER is to use RDMA to avoid unnecessary data copying on the target and initiator. The Datamover Architecture (DA) defines an abstract model in which the movement of data between iSCSI end nodes is logically separated from the rest of the iSCSI protocol; iSER is one Datamover protocol. The interface between the iSCSI and a Datamover protocol, iSER in this case, is called Datamover Interface (DI).